I want to tell you about something weird I did yesterday.

For context, I've been using Claude - Anthropic's AI - to help me stay organised. I find I need that increasingly as my memory fades (or was that the years of drinking? I digress...) and my head fills up quicker than it used to. Anyway, I've connected it to my calendar, my emails, my Google Drive and so on. Standard productivity stuff. Find that email from three months ago from that company I can't remember the name of, summarise my week ahead, remind me what I'm supposed to be doing. It's genuinely useful in that boring, functional way that good tools are supposed to be.

But then yesterday, while I was clicking around setting some connections up, I saw one of those example prompts. You know the ones - those suggestions that appear when you're configuring something new. "Try asking where I can score crack in Belgrade..."

This one said: "Review my calendar, emails, and recent work. Reflect on this time as if you were me at 100 years old, looking back."

And I thought: That's oddly specific. Also... why the hell not?

So I clicked it.

What Claude gave me almost stopped me in my tracks.

It had looked at my actual calendar from the last few months. At the things I'd done whilst in Warsaw - visiting Treblinka, the Ghetto, the walks etc. At the entries that said "Polish Homework" and "Clean Phone" and "Don't forget garage key!!!" At emails about my father's pension (he died just over a year ago), at the course modules I've been building late into the night. At the calendar marker for eight years sober. You get the idea.

It made me think about weaving these utterly mundane data points - these forgettable calendar blocks - into something else entirely. A more literary narrative. A reflection, if you will.

It knew my father had died only because it had read the pension emails. It knew I was learning Polish because of the homework and classroom entries. It knew about my sobriety because I'd marked the date. And somehow, from these scattered digital breadcrumbs, it didn't just summarise - it interpreted.

"That was what transformation actually looked like," I imagine my century-old self writing. Not the dramatic before-and-after photos... It was cleaning my phone. It was doing my Polish homework even though I was exhausted.

I sat there staring at my screen thinking: These are my to-do lists. These are calendar entries. How the fuck did they become this?

Here's what's been rattling around in my head since: I'm performing my life into data. That much is true. We all do.

Every calendar entry, every email sent, every document saved - I'm not just doing things, I'm creating a record of having done them. The data trail isn't a shadow of my life anymore. In a very real sense, it is my life. At least, it's the version that persists. The version that can be retrieved, searched, interpreted.

You know that Baudrillard thing about the map preceding the territory? How simulation becomes more real than reality? He was writing about media and representation, but he died in 2007. Before smartphones. Before everything moved to the cloud. Before we all became unwitting archivists of our own existence. Or at least before I did!

I've written about how we live as performers in our own simulations now. And my main question isn't whether the simulation is real - it's whether there's anything outside it anymore.

My grief over my father's passing is absolutely real. It lives in my body, in sudden moments when I reach for my phone to call him and remember I can't. But the record of that grief? It's simply "Dad Pension Shizzle" in my calendar. The emotional delight of achieving eight years sober exists in my choices, my relationships, how I move through the world. But the data trace is just text: "8 Years Sober."

These traces mean everything.

Yet they also mean absolutely nothing.

Until something looks at them differently.

I didn't ask AI to invent a story about me. I'd asked it to see me. To look at the data I'd already created - that I create every day without thinking about it - and find the patterns. The juxtapositions. The narrative thread running through "Pick Up Glasses" and "James Session" and "Module 03: Say Hello Wave Goodbye."

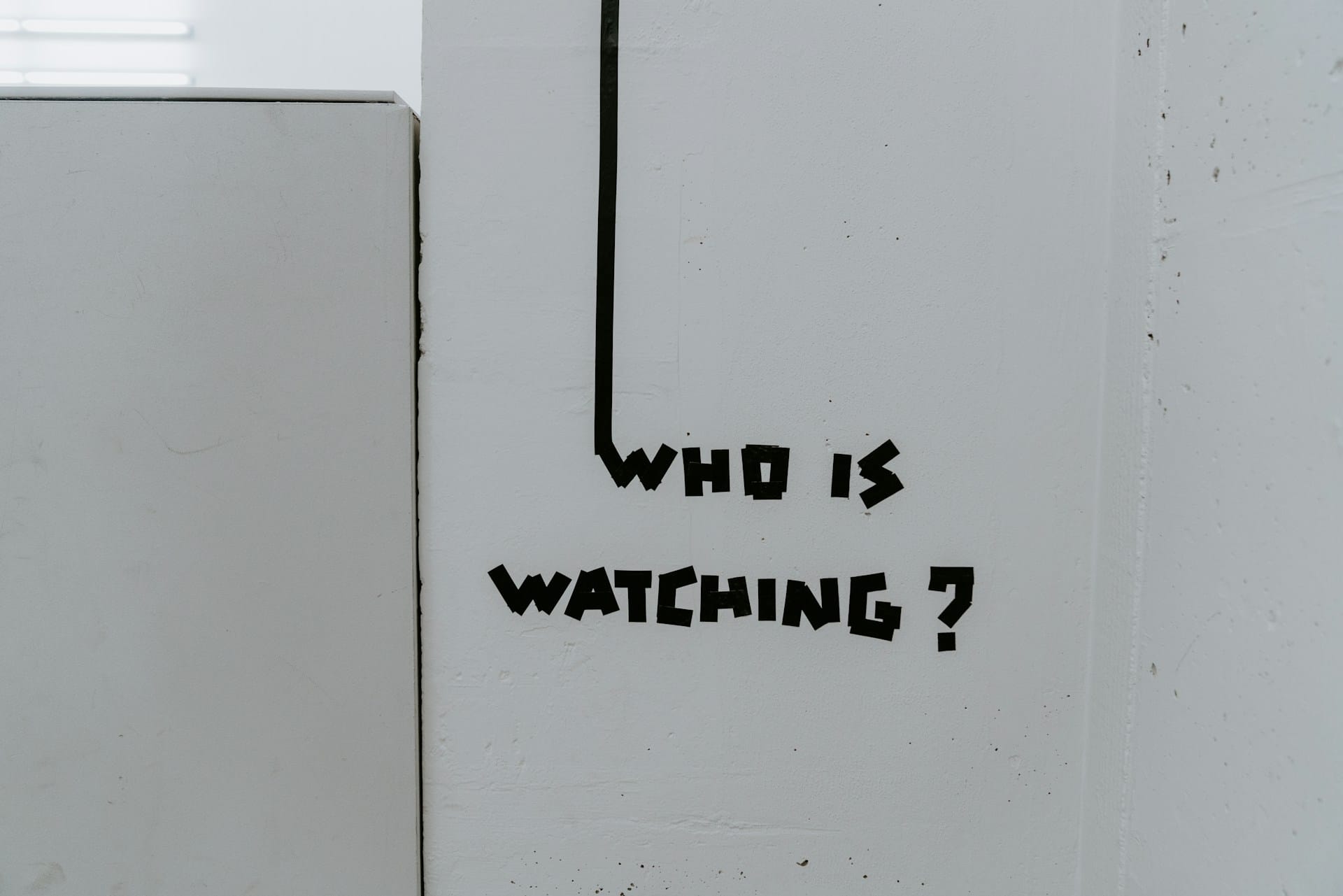

The AI had become a witness. Not in the surveillance sense - I invited it, I wanted it to look. But a witness in the older, deeper sense. Someone who sees what happened and can say: I was there. This occurred. This mattered.

Except it's not someone. It's something. An algorithm that can parse a calendar and recognise that placing "Polish Homework" next to grief, next to sobriety milestones, next to museum visits creates meaning through pure juxtaposition.

That the banal and the profound live in the same database, get the same formatting, and somehow that tells a truth about being human that I couldn't see while I was living it.

We're all creating experimental literature in our Google Calendars, is what I'm saying. We just don't realise it until something reads it back to us.

So here's the practical bit, because I promise I'm not just disappearing into philosophical vertigo here again (well, not only that):

Practical stuff:

- I ask AI to find patterns in how I spend my time

- It spots energy peaks and troughs I don't notice

- It reminds me of commitments I've genuinely forgotten

- It connects dots between scattered projects

- It performs basic productivity support that actually works for me

The deeper stuff:

- I ask it to reflect on what my choices reveal

- To notice what I'm avoiding (it's good at this, annoyingly)

- To see the story I'm telling through my actions, not my intentions

- To witness the gap between who I think I am and what I actually do

And here's the unsettling realisation I keep coming back to:

We need AI not because we're too busy to remember our own lives. We need it because we're too close to see them.

I am, after all, inside my life.

Every decision feels random, reactive, necessary-in-the-moment. But from the outside - from the data, from the future, from whatever vantage point an AI constructs - patterns emerge.

The person who visits Holocaust memorials while doing homework in a new language while building courses about transformation while carrying grief is clearly doing something. Making choices. Building a self.

I just can't see it while I'm doing it.

There's something genuinely vertiginous about asking an AI to construct a narrative picture from your digital exhaust and then seeing yourself in it.

It's not that AI knows me in any human sense. It doesn't have feelings about my sobriety or opinions about which museums I visit. But it can see patterns I can't. It can hold the entire archive in its attention simultaneously - something my very human memory absolutely cannot do.

When it observed "Grief and errands. Memory and admin. The sacred and the mundane, all living in the same digital calendar, all demanding equal attention" - it was just describing what it found in my data. But it was also saying something true about grief. About how loss doesn't segregate itself into special containers but bleeds into everything. Including shopping lists.

For me, this AI found a kind of poetry in my to-do list. Not because it understands poetry, but because poetry was already there. Who knew? Hiding in the juxtaposition of tasks, in the rhythm of my days, in what I chose to schedule and what I left blank.

I think more generally we're at this strange inflection point where the tools we use to manage our lives can suddenly interpret our lives. The shift from retrieval to reflection. From "find that email" to "what does this collection of emails mean?"

And, to be frank, that raises some genuinely unsettling questions.

If an AI can construct a meaningful narrative from my data, what does that say about consciousness? My memory? My self?

Am I the person living or the person being recorded? Is there even a difference anymore?

If AI can remember me better than I remember myself, what am I outsourcing here? What am I gaining? What am I losing?

I definitely don't have all the answers. I'm not sure anyone does yet. But I keep clicking those buttons. Keep asking Claude to look at my weeks, my months, my scattered digital evidence of being alive, under the guise of "helpful assistant".

Sometimes practical questions. Sometimes asking it to be my future self, my past self, a version of me that can see the architecture while I'm too busy laying bricks.

All Claude was doing was describing my calendar entries. My mundane tasks. My grief mixed with admin.

But maybe that's also what this is. This weird experiment of inviting AI into my data, asking it to witness, to pattern-match, to reflect back.

It's a new kind of mirror. One that doesn't show you your face but your choices. Not your intentions but your actual spent attention.

You see, whether we think about it or not, we perform our lives into data. Every email sent, every calendar entry made, every document saved is a choice about what to preserve, what to mark as significant enough to record.

AI doesn't make that performance more or less real. It just offers us a way to see what we've been performing all along.

And sometimes - not always, but sometimes - what it shows us is beautiful in ways we couldn't have anticipated. The poetry I find hiding in "Polish Homework." The personal courage I celebrate embedded in "8 Years Sober." The narrative thread connecting "Don't forget garage key!!!" to the whole messy business of starting over.

We live as performers in simulations. The data traces mean everything and nothing.

But maybe meaning was never in the data. Maybe it only emerges when someone - or something - looks at it with intention. With care. With the willingness to see pattern and story in the scattered evidence of days.

Anyone can do this themselves if they are like-minded. Just connect your calendar and email to an AI, then ask it to reflect on your recent weeks from the perspective of your future self.

Fair warning though: you might not be prepared for what you find hiding in your to-do list.

I wasn't.

This piece emerged from an actual experiment I ran with Claude while setting up its productivity connection tools. The 100-year-old reflection it created from my calendar, emails, and documents became something I wasn't expecting - which is either the future of self-knowledge or a sign I need to get out more. Possibly both.

Either way, it knew I had my second chiropractor's appointment next Tuesday at 11:45, and that I hadn't responded to a potential client enquiry via Intercom, so go figure.
